How does ChatGPT's Transformer produce intelligence?

Moderators: verdelite, TheMatrix

Re: How does ChatGPT's Transformer produce intelligence?

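The Transformer was not considered an outstanding model, but OpenAI improved the training and made good progress, and Microsoft gave it a push in the commercial market along the way, seizing the first-mover advantage.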

The AlphaGo that was scrapped used 1,202 CPUs and 176 GPUs.

GPT-3.5: 10,000 GPUs

GPT-4: 40,000 GPUs

Electricity per GPU: USD 20-36 per month

Let's see who can afford to play.
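The cost claim can be made concrete with a little arithmetic. This sketch only multiplies the GPU counts quoted above by the quoted USD 20-36 per GPU per month. Those figures come from the post and are not verified here. CPUs, cooling, and hardware purchase costs are ignored.

```python
# GPU counts and per-GPU electricity cost as quoted in the post (not verified).
GPU_COUNTS = {"AlphaGo": 176, "GPT-3.5": 10_000, "GPT-4": 40_000}
COST_PER_GPU_MONTH = (20, 36)  # USD per GPU per month: low and high estimate


def monthly_power_cost(n_gpus, per_gpu=COST_PER_GPU_MONTH):
    """Return the (low, high) monthly electricity cost in USD for n_gpus GPUs."""
    low, high = per_gpu
    return n_gpus * low, n_gpus * high


for name, n in GPU_COUNTS.items():
    low, high = monthly_power_cost(n)
    print(f"{name}: ${low:,} - ${high:,} per month")
```

On these numbers, GPT-4's GPUs alone would draw roughly USD 0.8-1.44 million of electricity per month. The AlphaGo setup comes to about USD 3,500-6,300.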

Reportedly, scary AI is on its way. Let's wait and see: will the machines be the death of us, or will humans strangle the machines? Ha ha ha.

Re: How does ChatGPT's Transformer produce intelligence?

"attention is a technique that is meant to mimic cognitive attention."

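Attention looks quite simple, too: the so-called softmax is just exponential weighting.

https://wikimedia.org/api/rest_v1/media ... f51f905b08

Softmax is nothing new in statistics. It amplifies the large values and suppresses the small ones. Is that what they call attention?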
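The "amplifies the large, suppresses the small" effect is easy to see numerically. This minimal sketch compares softmax with plain proportional normalization on the same three scores. The score values are arbitrary illustration values. In a Transformer, softmax is only the normalization step: it is applied to query-key similarity scores.

```python
import math


def softmax(scores):
    """Exponentiate each score and normalize so the weights sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def linear_normalize(scores):
    """Proportional weights for comparison (assumes positive scores)."""
    total = sum(scores)
    return [s / total for s in scores]


scores = [1.0, 2.0, 4.0]
print([round(w, 3) for w in linear_normalize(scores)])  # [0.143, 0.286, 0.571]
print([round(w, 3) for w in softmax(scores)])           # [0.042, 0.114, 0.844]
```

The largest score's share rises from about 57% to about 84%, while the smallest drops from about 14% to about 4%.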

-

TheMatrix

- Forum pillar

2024 Outstanding Moderator

TheMatrix's blog - post interactions: 275

- Posts: 13587

- Registered: 2022-07-26 00:35

Re: How does ChatGPT's Transformer produce intelligence?

Softmax is not attention. A Transformer has three matrices: K, Q, and V. I have never fully figured out exactly how they work together.

(In reply to FoxMe, 2023-04-04 15:48, who wrote: "attention is a technique that is meant to mimic cognitive attention.")

Re: How does ChatGPT's Transformer produce intelligence?

ChatGPT explained:

The Transformer model is composed of an encoder and a decoder, each containing multiple layers of self-attention and feedforward neural networks. The encoder processes the input sequence, while the decoder generates the output sequence. Both the encoder and decoder layers use residual connections and layer normalization to improve training stability.

The self-attention mechanism used in the Transformer model allows the model to learn which parts of the input data to attend to, and how much weight to give to each part. This is achieved by computing attention weights for each position in the input sequence based on its similarity to the other positions. These attention weights are then used to compute a weighted average of the value vectors at all input positions, and this average serves as the context vector for that position.

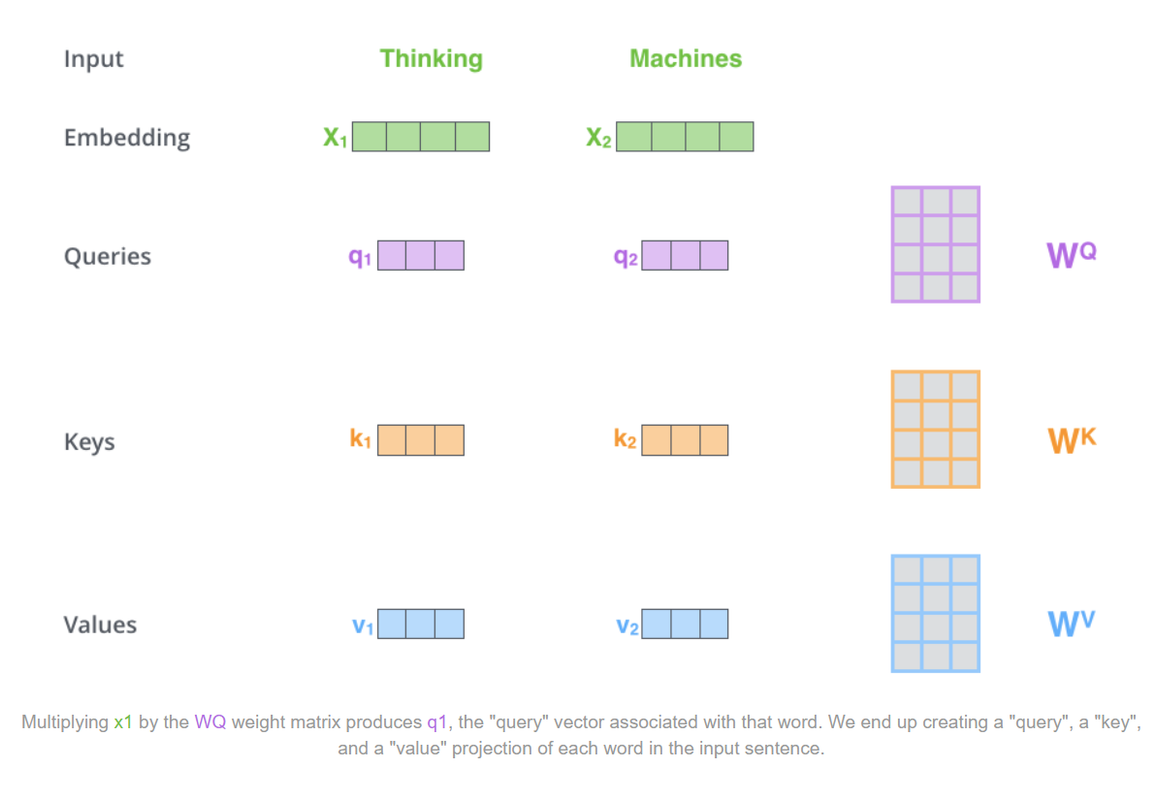

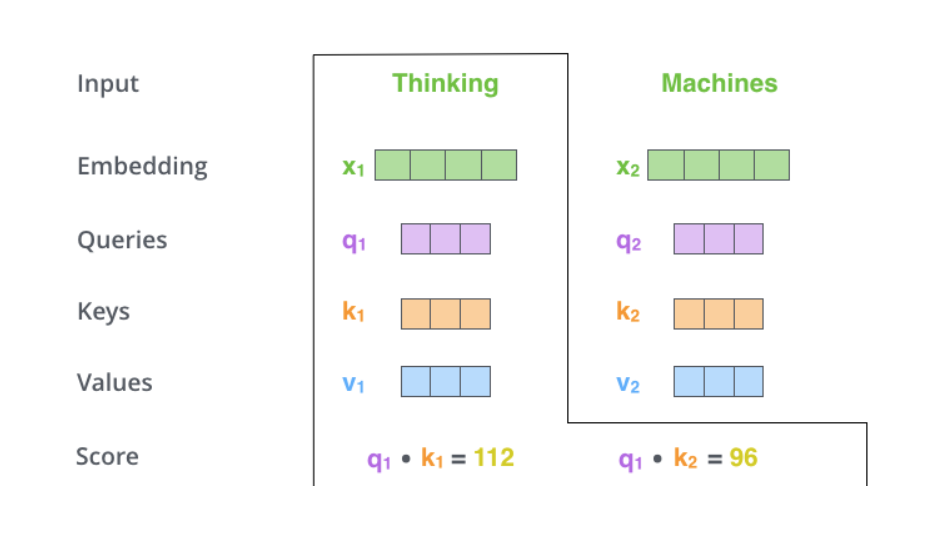

In self-attention, each token's input vector is transformed into three vectors: a query vector, a key vector, and a value vector. These vectors are obtained by multiplying the input vectors by three learned weight matrices. The dot product of a position's query vector with the key vector of every position produces a score for each position in the input sequence. In the original Transformer, these scores are divided by sqrt(d_k), where d_k is the key dimension. The scores are normalized using a softmax function to obtain attention weights, which are used to weight the corresponding value vectors. The weighted value vectors are then summed to obtain the output representation for that position.
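A minimal single-head sketch of the computation just described, in plain Python. The random matrices are hypothetical stand-ins for learned weights, and the sizes (4 tokens, d_model = 8, d_k = 4) are arbitrary. The 1/sqrt(d_k) scaling follows the original Transformer paper.

```python
import math
import random


def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m); both are lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    """Numerically stable softmax of a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention.

    x: n x d_model input vectors; w_q, w_k, w_v: d_model x d_k projection matrices.
    Returns (outputs, weights): n x d_k outputs and the n x n attention weights.
    """
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    outputs, weights = [], []
    for qi in q:
        # Score this query against every key, scaled by 1/sqrt(d_k).
        scores = [sum(a * b for a, b in zip(qi, kj)) / math.sqrt(d_k) for kj in k]
        w = softmax(scores)  # attention weights for this query; they sum to 1
        weights.append(w)
        # Output = weighted sum of all value vectors.
        outputs.append([sum(w[j] * v[j][c] for j in range(len(v))) for c in range(len(v[0]))])
    return outputs, weights


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0, 1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
n, d_model, d_k = 4, 8, 4
x = random_matrix(n, d_model, rng)  # stand-in for 4 token embeddings
w_q, w_k, w_v = (random_matrix(d_model, d_k, rng) for _ in range(3))  # stand-ins for learned weights
out, attn = self_attention(x, w_q, w_k, w_v)
print(len(out), len(out[0]))                 # 4 4
print([round(sum(row), 6) for row in attn])  # [1.0, 1.0, 1.0, 1.0]
```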

Together, these vectors are used to compute a weighted sum of the values that are relevant to a given query. Each value's weight depends on the similarity between the query and that value's key.

Query Vector: A query vector is a representation of a specific input or context that is being used to retrieve relevant information from a set of values. In the context of attention mechanisms, the query vector is used to compute the similarity between the query and the key vectors.

Key Vector: A key vector is a representation attached to each value, against which the query is matched to judge that value's relevance. In the context of attention mechanisms, the key vector is used to compute the similarity between the query and the key, and that similarity determines how much weight the associated value vector receives.

Value Vector: A value vector is a representation of a piece of information that is associated with a specific key vector. In the context of attention mechanisms, the value vectors are combined in a weighted sum, where each value's weight depends on the similarity between the query and that value's key.
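One way to read these three definitions is as a "soft" version of a dictionary lookup. This sketch contrasts an exact-match lookup with an attention-style lookup that blends every value according to softmax(query · key). The keys, values, and function names are made up for illustration. The Transformer additionally applies learned projections and 1/sqrt(d_k) scaling, and both are omitted here.

```python
import math


def hard_lookup(query, keys, values):
    """Ordinary dictionary-style lookup: return the value whose key equals the query."""
    return values[keys.index(query)]


def soft_lookup(query, keys, values):
    """Attention-style lookup: blend all values, weighted by softmax(query . key)."""
    scores = [sum(q * k for q, k in zip(query, key)) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * v[c] for w, v in zip(weights, values)) for c in range(len(values[0]))]


keys = [(1.0, 0.0), (0.0, 1.0)]
values = [[10.0, 0.0], [0.0, 10.0]]
print(hard_lookup((1.0, 0.0), keys, values))                          # [10.0, 0.0]
print([round(x, 3) for x in soft_lookup((5.0, 0.0), keys, values)])   # [9.933, 0.067]
print(soft_lookup((1.0, 1.0), keys, values))                          # [5.0, 5.0]
```

A query that strongly matches one key returns almost exactly that key's value. A query equally similar to both keys returns an even mix.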

A query vector is a mathematical representation of a search query in a vector space model, which is a common approach used in information retrieval systems to match search queries with relevant documents.

In a vector space model, each document and query is represented as a vector of numerical values that correspond to the presence or absence of certain terms in the document or query. The terms used in the model are typically identified by a process called term extraction or feature selection, which identifies the most important words or phrases in the document corpus.

To create a query vector, the search query is typically preprocessed to remove stop words and other irrelevant terms, and then converted into a vector representation using the same set of terms as the document vectors. This vector can then be used to measure the similarity between the query and each document in the corpus, usually by calculating the cosine similarity between the query vector and each document vector.
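A small sketch of this vector space model. It uses raw term counts over a shared vocabulary and ranks documents by cosine similarity to the query. The stop-word list and the three documents are hypothetical. Real systems usually use TF-IDF weights rather than raw counts.

```python
import math
from collections import Counter

STOP_WORDS = {"the", "a", "of", "is", "in"}  # tiny illustrative stop-word list (assumption)


def to_vector(text, vocab):
    """Term-count vector over a fixed vocabulary, after dropping stop words."""
    counts = Counter(w for w in text.lower().split() if w not in STOP_WORDS)
    return [counts[t] for t in vocab]


def cosine(u, v):
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


docs = ["the cat sat on the mat", "stock prices fell in the market", "a cat chased a mouse"]
vocab = sorted({w for d in docs for w in d.lower().split() if w not in STOP_WORDS})
doc_vecs = [to_vector(d, vocab) for d in docs]
query_vec = to_vector("cat mouse", vocab)

ranked = sorted(range(len(docs)), key=lambda i: cosine(query_vec, doc_vecs[i]), reverse=True)
print([docs[i] for i in ranked])
# ['a cat chased a mouse', 'the cat sat on the mat', 'stock prices fell in the market']
```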

In the context of attention mechanisms, the key vectors are the representations against which a given query vector is matched. The similarity between the query vector and each key vector determines the weight of the corresponding value vector in the final output of the attention mechanism.

The choice of key representation can have a significant impact on the performance of the attention mechanism. In some attention models, the keys are the same vectors as the values (for example, the encoder hidden states in early RNN-based attention). In the Transformer, keys and values are separate learned linear projections of the same input.

In the Transformer's dot-product attention, queries and keys are produced by separate learned linear transformations, and the similarity between a query and a key is their dot product, scaled by 1/sqrt(d_k). Another approach, additive attention, combines the query and key through a small learned feed-forward network to compute the similarity score.
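The two scoring functions can be compared side by side. This sketch implements the scaled dot-product score and the additive score v · tanh(W_q q + W_k k); additive attention is the form associated with Bahdanau et al.'s RNN translation model. The parameter values below are hypothetical toy numbers, not learned weights.

```python
import math


def scaled_dot_score(q, k):
    """Transformer-style score: dot product scaled by 1/sqrt(d_k)."""
    return sum(a * b for a, b in zip(q, k)) / math.sqrt(len(k))


def additive_score(q, k, w_q, w_k, v):
    """Additive-attention score: v . tanh(W_q q + W_k k).

    w_q and w_k are lists of rows (one row per hidden unit); v has one entry
    per hidden unit. In a real model all three are learned parameters.
    """
    hidden = [math.tanh(sum(a * b for a, b in zip(row_q, q)) +
                        sum(a * b for a, b in zip(row_k, k)))
              for row_q, row_k in zip(w_q, w_k)]
    return sum(a * b for a, b in zip(v, hidden))


q, k = [1.0, 0.0, 1.0, 0.0], [1.0, 1.0, 1.0, 1.0]
print(scaled_dot_score(q, k))  # 1.0 (dot product 2, divided by sqrt(4))

# Hypothetical toy parameters with a hidden size of 2.
w_q = [[0.5, 0.0, 0.5, 0.0], [0.0, 1.0, 0.0, 1.0]]
w_k = [[0.1, 0.1, 0.1, 0.1], [0.2, 0.0, 0.0, 0.2]]
v = [1.0, -1.0]
print(additive_score(q, k, w_q, w_k, v))
```

The dot-product score needs no extra parameters and reduces to one matrix multiply over all positions. This is a large part of why the Transformer uses it.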

During the self-attention computation, each query vector attends to all key vectors in the input sequence, and the attention scores determine how much weight to assign to each value vector when computing the output. The output is then computed as a weighted sum of the value vectors, where the weights are determined by the attention scores.

The value vectors are important because they provide the actual information that is used to compute the output of the self-attention mechanism. By attending to different subsets of the value vectors with different weights, the self-attention mechanism can selectively emphasize or suppress different aspects of the input sequence, enabling the model to capture complex relationships between different elements of the sequence.

Last edited by TheMatrix on 2023-04-05 14:25.

Re: How does ChatGPT's Transformer produce intelligence?

(In reply to TheMatrix's post of 2023-04-05 13:43, "ChatGPT explained".)

In a Transformer model, the encoder is responsible for processing the input sequence and creating a representation of it that the decoder can use to generate the output sequence.

The encoder consists of multiple layers of self-attention and feedforward neural networks. The self-attention mechanism lets every position of the input sequence weigh all the other positions, while the feedforward network applies a non-linear transformation to the output of the self-attention layer.

In each encoder layer, the self-attention mechanism calculates attention scores between all pairs of tokens in the input sequence. These scores are used to compute a weighted sum of the value vectors, which are linear projections of the layer's input. The result is added back to the layer's input through a residual connection and layer-normalized. This produces a new representation of each token that takes into account the context of the other tokens in the sequence.

After the self-attention sub-layer, the feedforward network applies the same non-linear transformation to each position independently, again with a residual connection and layer normalization. The result is passed on to the next layer of the encoder. This process is repeated for each layer of the encoder, allowing the model to gradually refine its representation of the input sequence.

The final output of the encoder is a sequence of vectors that represent the input sequence, with each vector containing information about the context of the corresponding word in the sequence. These vectors are then passed on to the decoder, which uses them to generate the output sequence.
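A rough sketch of the encoder layer structure just described, in the post-LN arrangement LayerNorm(x + Sublayer(x)) used by the original Transformer. Several simplifications are assumptions made for brevity:

- single-head attention with identity Q/K/V projections
- no learned LayerNorm gain or bias
- random feed-forward weights
- the same weights reused for both stacked layers (a real model gives each layer its own)

```python
import math
import random


def layer_norm(vec, eps=1e-5):
    """Normalize a vector to zero mean and unit variance (learned gain/bias omitted)."""
    mean = sum(vec) / len(vec)
    var = sum((x - mean) ** 2 for x in vec) / len(vec)
    return [(x - mean) / math.sqrt(var + eps) for x in vec]


def softmax(row):
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(xs):
    """Simplified self-attention with identity Q/K/V projections (assumption)."""
    d = len(xs[0])
    out = []
    for q in xs:
        w = softmax([sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in xs])
        out.append([sum(w[j] * xs[j][c] for j in range(len(xs))) for c in range(d)])
    return out


def feed_forward(vec, w1, w2):
    """Position-wise FFN: ReLU(vec @ w1) @ w2, applied to one position at a time."""
    hidden = [max(0.0, sum(vec[i] * w1[i][h] for i in range(len(vec)))) for h in range(len(w1[0]))]
    return [sum(hidden[h] * w2[h][o] for h in range(len(hidden))) for o in range(len(w2[0]))]


def encoder_layer(xs, w1, w2):
    """Post-LN encoder layer: y = LN(x + Attn(x)), then LN(y + FFN(y)) per position."""
    attn = self_attention(xs)
    ys = [layer_norm([a + b for a, b in zip(x, at)]) for x, at in zip(xs, attn)]
    return [layer_norm([a + b for a, b in zip(y, feed_forward(y, w1, w2))]) for y in ys]


rng = random.Random(1)
n, d_model, d_ff = 3, 4, 8
xs = [[rng.gauss(0, 1) for _ in range(d_model)] for _ in range(n)]
w1 = [[rng.gauss(0, 0.5) for _ in range(d_ff)] for _ in range(d_model)]
w2 = [[rng.gauss(0, 0.5) for _ in range(d_model)] for _ in range(d_ff)]

h = xs
for _ in range(2):  # stack two layers (the original paper uses 6), sharing weights here
    h = encoder_layer(h, w1, w2)
print(len(h), len(h[0]))  # 3 4: one refined vector per input token
```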

Re: How does ChatGPT's Transformer produce intelligence?

(In reply to TheMatrix's post of 2023-04-05 13:43, "ChatGPT explained".)

In a Transformer model, the decoder is one of the two main components (the other being the encoder) used for sequence-to-sequence tasks such as machine translation, text summarization, and question answering. The decoder takes in the output of the encoder, a set of encoded representations of the input sequence, and generates the output sequence.

The decoder is a stack of N identical layers (N = 6 in the original Transformer). Each layer contains three sub-layers, and the first two of these are attention layers:

Masked multi-head self-attention layer: This layer allows each decoder position to attend to all decoder positions up to and including itself, while preventing it from attending to subsequent positions. This is achieved by adding a large negative value (or negative infinity) to the attention scores of the masked positions before the softmax, so that they receive effectively zero weight.

Multi-head encoder-decoder (cross-) attention layer: This layer allows the decoder to attend to the output of the encoder. Its queries come from the previous decoder sub-layer, while its keys and values come from the encoded representation of the input sequence, so it produces a weighted sum of the encoder outputs. This helps the decoder extract relevant information from the input sequence when generating the output sequence.
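The two attention sub-layers differ only in where the queries, keys, and values come from and whether a mask is applied. This is a minimal sketch under simplifying assumptions: toy vectors, a single head, no learned projections, and no residual connections. Negative infinity is used for masked scores. A large finite negative number has the same practical effect.

```python
import math


def attend(queries, keys, values, mask=None):
    """Scaled dot-product attention. mask[i][j] is False where query i must not see key j."""
    d_k = len(keys[0])
    out, all_weights = [], []
    for i, q in enumerate(queries):
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_k) for k in keys]
        if mask is not None:
            # Masked scores become -inf, so exp() turns them into exactly 0 weight.
            scores = [s if mask[i][j] else -math.inf for j, s in enumerate(scores)]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        w = [e / total for e in exps]
        all_weights.append(w)
        out.append([sum(wj * v[c] for wj, v in zip(w, values)) for c in range(len(values[0]))])
    return out, all_weights


def causal_mask(n):
    """Position i may attend to positions 0..i only."""
    return [[j <= i for j in range(n)] for i in range(n)]


# Hypothetical toy vectors (learned Q/K/V projections omitted for brevity).
encoder_out = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 source positions
decoder_in = [[2.0, 0.0], [0.0, 2.0]]               # 2 target positions generated so far

# Sub-layer 1: masked self-attention over the decoder's own positions.
self_out, self_w = attend(decoder_in, decoder_in, decoder_in, causal_mask(2))
print(self_w[0])  # [1.0, 0.0]: the first position cannot see the second

# Sub-layer 2: cross-attention; queries from the decoder, keys and values from the encoder.
cross_out, cross_w = attend(self_out, encoder_out, encoder_out)
print(len(cross_out), len(cross_w[0]))  # 2 3: one weight per source position
```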

The third sub-layer is a position-wise feed-forward network. Each of the three sub-layers is wrapped in a residual connection followed by layer normalization. The output of the final layer is passed through a linear layer and a softmax to produce a probability distribution over the vocabulary for the next token in the output sequence.
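The final step can be sketched as a linear projection to one logit per vocabulary entry, followed by a softmax. The 4-token vocabulary and the weight values are hypothetical. Picking the most probable token (greedy decoding) is only one of several decoding strategies.

```python
import math


def next_token_distribution(hidden, w_out, vocab):
    """Project the final decoder vector to vocabulary logits, then apply softmax."""
    logits = [sum(h * w_out[i][j] for i, h in enumerate(hidden)) for j in range(len(vocab))]
    m = max(logits)
    exps = [math.exp(x - m) for x in logits]
    total = sum(exps)
    return {tok: e / total for tok, e in zip(vocab, exps)}


vocab = ["<eos>", "cat", "dog", "mat"]  # hypothetical 4-token vocabulary
w_out = [[0.1, 2.0, 0.5, -1.0],         # hypothetical d_model x vocab weights (d_model = 2)
         [0.0, 1.0, 1.5, 0.2]]
probs = next_token_distribution([1.0, 0.5], w_out, vocab)
print(max(probs, key=probs.get))  # cat (greedy decoding picks the most probable token)
```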

Re: How does ChatGPT's Transformer produce intelligence?

(In reply to TheMatrix's post of 2023-04-05 13:43, "ChatGPT explained".)

In a Transformer model, the key vectors are computed from the input token sequence using learned weights (the embedding matrix and a key projection matrix). Here are the general steps:

Embedding: The input tokens are first converted to vectors using an embedding matrix. The embedding matrix is a learnable parameter matrix that maps each token to a high-dimensional vector representation.

Linear Transformation: The embedded input tokens are transformed using a linear transformation to obtain a sequence of keys. The transformation matrix is another learnable parameter matrix, which is used to project the embeddings into a key space.

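Splitting: Each key vector is then split along its feature dimension into several smaller vectors, one per "head". Every head still covers the whole sequence but works in a different lower-dimensional subspace, which allows the model to attend to multiple aspects of the input simultaneously.

Scaling: The dot products between queries and keys are scaled by 1/sqrt(d_k), where d_k is the per-head key dimension. Without this, the dot products grow with d_k and push the softmax into regions with extremely small gradients, which slows training.

Masking: Optionally, some of the keys may be masked to prevent the model from attending to certain parts of the input sequence, such as padding tokens.

Attention: The scaled, masked scores between each query and the keys are passed through a softmax to obtain attention weights. These weights determine how much each token's value vector contributes to each output position.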
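These steps can be strung together in a small sketch. Every parameter value here is a hypothetical toy: identity-like projection matrices, a 3-token vocabulary, d_model = 4, and 2 heads. Positional encodings, which the original Transformer adds to the embeddings, are left out, as in the list. The heads are obtained by slicing one projection, which is equivalent to using separate per-head projection matrices.

```python
import math


def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m); both are lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def split_heads(rows, num_heads):
    """Slice each row's features into num_heads equal parts; result is heads[h][token]."""
    d = len(rows[0])
    if d % num_heads:
        raise ValueError("feature size must be divisible by num_heads")
    size = d // num_heads
    return [[r[h * size:(h + 1) * size] for r in rows] for h in range(num_heads)]


def head_weights(q_head, k_head, keep):
    """Scaled scores for one head, masked where keep is False, then softmax."""
    d_head = len(k_head[0])
    weights = []
    for q in q_head:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d_head) if ok else -math.inf
                  for k, ok in zip(k_head, keep)]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]  # exp(-inf) == 0.0 for masked keys
        total = sum(exps)
        weights.append([e / total for e in exps])
    return weights


# Hypothetical toy parameters: vocabulary of 3 tokens, d_model = 4, 2 heads.
embedding = [[1.0, 0.0, 0.0, 1.0],   # token 0 (used as padding below)
             [0.0, 1.0, 1.0, 0.0],   # token 1
             [1.0, 1.0, 0.0, 0.0]]   # token 2
w_q = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]  # identity, for readability
w_k = [[0.5 if i == j else 0.0 for j in range(4)] for i in range(4)]

token_ids = [2, 1, 0]       # the last token is padding
keep = [True, True, False]  # padding mask over key positions

x = [embedding[t] for t in token_ids]                    # 1. embedding lookup
q, k = matmul(x, w_q), matmul(x, w_k)                    # 2. linear projections
q_heads, k_heads = split_heads(q, 2), split_heads(k, 2)  # 3. split into heads
attn = [head_weights(qh, kh, keep) for qh, kh in zip(q_heads, k_heads)]  # 4-6: scale, mask, softmax
print(attn[0][0])  # head 0, query 0: the padded key gets weight 0.0
```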

Overall, the key vectors in a Transformer model are learned as part of the training process, and are optimized to capture meaningful features of the input sequence.
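The scaling step in the list above can be checked numerically. The original Transformer paper motivates it this way: if the components of q and k are independent with mean 0 and variance 1, then q · k has variance d_k. This sketch samples random Gaussian vectors, which stand in for real queries and keys (an assumption), and measures the spread of the raw and scaled dot products.

```python
import math
import random
import statistics


def dot_product_std(d_k, trials=4000, scale=False, seed=0):
    """Empirical std of q . k for random q, k with i.i.d. N(0, 1) entries."""
    rng = random.Random(seed)
    vals = []
    for _ in range(trials):
        q = [rng.gauss(0, 1) for _ in range(d_k)]
        k = [rng.gauss(0, 1) for _ in range(d_k)]
        s = sum(a * b for a, b in zip(q, k))
        vals.append(s / math.sqrt(d_k) if scale else s)
    return statistics.pstdev(vals)


for d_k in (4, 64):
    # Raw std grows like sqrt(d_k); the scaled version stays near 1.
    print(d_k, round(dot_product_std(d_k), 2), round(dot_product_std(d_k, scale=True), 2))
```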

-----

In a Transformer model, query vectors are typically generated as part of the self-attention mechanism. The self-attention mechanism is used to compute attention weights between each token in the input sequence and all other tokens in the same sequence.

To generate query vectors, the Transformer model first applies three learned matrices, often referred to as the key, query, and value matrices, to the input embeddings. These matrices are used to project the input embeddings into three different spaces, allowing the model to learn different aspects of the input.

The query matrix is used to generate the query vectors. Specifically, for each input token, the query matrix is multiplied by the corresponding embedding vector to generate a query vector. These query vectors are then used to compute attention scores between the input tokens and other tokens in the same sequence.

The attention scores are used to compute a weighted sum of the value vectors, which are generated using the value matrix, to obtain the final representation of each token in the input sequence.

Re: How does ChatGPT's Transformer produce intelligence?

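Actually, summarizing and generalizing is not hard. What is hard is creating something new and deciding what to keep and what to discard.

COMMON SENSE is also an insurmountable chasm for GPT.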

Last edited by BCQ1 on 2023-04-05 16:59.